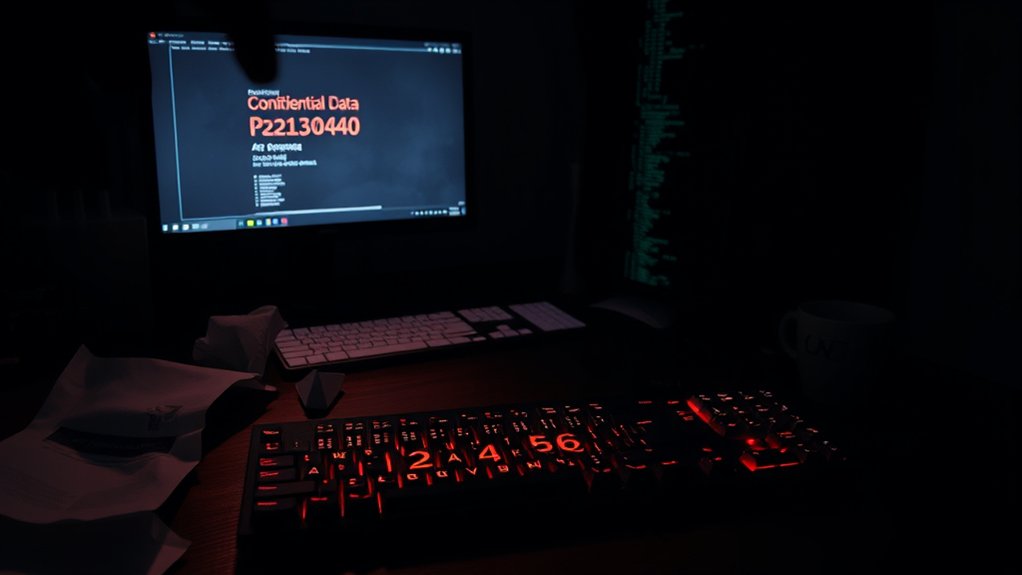

As hiring moves online, McDonald’s has deployed an AI hiring chatbot named Olivia to streamline the application process for prospective employees. The system is run by Paradox.ai and is meant to make recruitment more efficient by collecting applicants’ resumes and personal details. However, it has become the center of a significant security lapse that exposed the personal information in as many as 64 million applicant records. Security researchers Ian Carroll and Sam Curry found that they could get in through a basic flaw: an account protected by the password “123456,” one of the most commonly used and easily guessed passwords, which points to inadequate security controls. The incident shows how AI-driven hiring tools can put sensitive applicant information at risk.

The breach has also drawn attention to the lack of multifactor authentication, which left the system open to anyone who could guess a password. The exposed data included chat logs and resumes, which could be misused even though no malicious exploitation has been reported. The incident comes as more employers use AI for initial job interviews, and it raises broader questions about how that data is secured. It also shows how a single weak credential can open a serious gap in even a large-scale system.
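The following is a minimal sketch of the missing second factor. It assumes a time-based one-time password (TOTP, RFC 6238), the scheme used by common authenticator apps. Nothing in the reporting says which MFA method, if any, Paradox.ai has adopted, and `verify_login` and its parameters are hypothetical. The point is that a guessed password like “123456” would not be enough on its own.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    msg = struct.pack(">Q", counter)  # counter as an 8-byte big-endian integer
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP keyed on the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_login(password_ok: bool, secret: bytes, submitted_code: str,
                 at: Optional[float] = None, step: int = 30, window: int = 1) -> bool:
    """Hypothetical login check: the password must be correct AND the one-time code must match."""
    if not password_ok:
        return False  # wrong password: reject before checking the second factor
    now = time.time() if at is None else at
    current = int(now // step)
    # Accept codes from neighbouring time steps to tolerate small clock drift.
    for counter in range(current - window, current + window + 1):
        if counter < 0:
            continue
        if hmac.compare_digest(hotp(secret, counter), submitted_code):
            return True
    return False
```

The server and the user's authenticator app share `secret`. A correct password paired with a wrong or stale code is rejected, so a leaked or guessed password alone no longer grants access.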

Paradox.ai has responded by locking down the system and launching a bug bounty program to find and fix vulnerabilities. Even so, skepticism remains about how well companies oversee third-party software vendors. Both McDonald’s and Paradox.ai have expressed deep concern over the incident. McDonald’s does not directly manage the AI software, a distinction that matters when assigning accountability.

Paradox.ai has since acknowledged responsibility for the breach and pledged to adopt stronger security practices. Stakeholders are calling for greater oversight of vendor accountability, particularly around compliance with data protection regulations and transparency when breaches occur.

The cybersecurity lesson is clear. This incident is a stark reminder that basic oversights can undermine AI systems, and that strong security practices matter there as much as anywhere else. Experts recommend strict password policies and multifactor authentication as baseline measures to reduce risk. As organizations rely more heavily on AI technologies, following these best practices will be critical to preventing similar incidents.
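The following is a minimal sketch of what a “strict password policy” can look like at account creation. It loosely follows NIST SP 800-63B guidance: enforce a minimum length, and reject commonly used, repetitive or sequential, and context-specific passwords such as ones containing the username. The tiny blocklist, the function names, and the exact rules are illustrative assumptions, not anything Paradox.ai or McDonald’s is known to use.

```python
# Hypothetical, deliberately tiny blocklist for illustration. A real deployment
# would load a large list of common and previously breached passwords.
COMMON_PASSWORDS = {"123456", "123456789", "12345678", "password", "qwerty", "111111"}

MIN_LENGTH = 8  # minimum length for user-chosen passwords in NIST SP 800-63B


def is_repetitive_or_sequential(password: str) -> bool:
    """True for runs like 'aaaaaa' or '123456' where each character repeats or steps by one."""
    if len(password) < 2:
        return False
    steps = {ord(b) - ord(a) for a, b in zip(password, password[1:])}
    return steps in ({0}, {1}, {-1})


def password_problems(password: str, username: str = "") -> list[str]:
    """Return reasons to reject a new password; an empty list means it passes these checks."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    # Compare case-insensitively so "Password" is caught as well as "password".
    if password.lower() in COMMON_PASSWORDS:
        problems.append("appears on the common-password blocklist")
    if is_repetitive_or_sequential(password):
        problems.append("is a repeated or sequential run of characters")
    if username and username.lower() in password.lower():
        problems.append("contains the username")
    return problems
```

Under these rules, `password_problems("123456")` fails on three counts at once: it is too short, it is on the blocklist, and it is a sequential run. A long passphrase with none of those properties passes with an empty list.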